Blocklists in the ActivityPub fediverse

Part 2 of "Golden opportunities for the fediverse – and whatever comes next"

Originally published November 2023, last updated January 2025 – see the update log for details.

Note: if you're coming here from Bluesky, make sure to read the section "Widely shared blocklists can lead to significant harm", which applies to composable moderation as well. Bluesky currently also relies on blocklists, at the level of individual accounts rather than instances, so while the details are different, much of the rest of the article is hopefully relevant as well!

This installment of the multi-part series on Golden opportunities for the fediverse – and whatever comes next has five sections:

- Blocklists

- Widely shared blocklists can lead to significant harm

- Blocklists potentially centralize power – although they can also counter other power-centralizing tendencies

- Today's fediverse relies on instance blocking and blocklists

- Steps towards better blocklists

But first, a quick recap of "Mastodon and today’s ActivityPub Fediverse are unsafe by design and unsafe by default – and instance blocking is a blunt but powerful safety tool":

- Today's ActivityPub Fediverse is unsafe by design and unsafe by default – especially for Black and Indigenous people, women of color, LGBTQIA2S+ people1, Muslims, disabled people and other marginalized communities.

- Instance blocking is a blunt but powerful tool for improving safety

- Defederating from a few hundred "worst-of-the-worst" instances makes a huge difference in reducing harassment and abuse.

- Blocking or limiting large loosely-moderated instances (which are frequent sources of racism, misogyny, and transphobia) means even less harassment and bigotry (as well as less spam).

If terms like instances, blocking, defederating, limiting, or the fediverse are new to you (as they are to 99.9% of the world), you may want to read "Mastodon and today’s fediverse are unsafe by design and unsafe by default" before this post!

Update, May 2025: The recent papers "A Blocklist is a Boundary": Tensions between Community Protection and Mutual Aid on Federated Social Networks (by Erika Melder, Ada Lerner, and Michael Ann DeVito) and Understanding Community-Level Blocklists in Decentralized Social Media (by Owen Xingjian Zhang, Sohyeon Hwang, Yuhan Liu, Manoel Horta Ribeiro, and Andrés Monroy-Hernández) are excellent academic research on blocklists.

Instance blocking is a critical tool for admins and moderators who want to limit the amount of hate speech and harassment the people on their instances are exposed to. But with 20,000 instances in the ActivityPub Fediverse, how can admins know which ones are bad actors that should be defederated, or so loosely moderated that they should be limited? In #FediBlock and receipts, I discussed the current mechanisms – including the #FediBlock hashtag – and their limitations. And with hundreds of problematic instances out there, blocking them individually can be tedious and error-prone, and new admins often don't know to do it.

The FediHealth project, from 2019, started to address this problem by suggesting instances to follow and block, based on the policies of PeerTube and Pleroma instances "whose federation policies you trust." Starting in early 2023, Mastodon gave admins the ability to protect their instances from hundreds of problematic instances at a time by uploading blocklists (aka denylists): lists of instances to suspend or limit.

Terminology note: blocklist or denylist is preferred to the older blacklist and whitelist, which embed the racialized assumption that black is bad and white is good. Blocklist is more common today, but close enough to "blacklist" that denylist is gaining popularity.
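To make "lists of instances to suspend or limit" concrete, here's a minimal Python sketch of reading one. It assumes a CSV whose header uses `#domain`, `#severity`, and `#public_comment` columns, modeled on Mastodon's domain-block export format. Check the exact columns against the version you're running. `BlockEntry`, `parse_blocklist_csv`, and the `.example` domains are hypothetical names for illustration.

```python
import csv
import io
from dataclasses import dataclass


@dataclass(frozen=True)
class BlockEntry:
    domain: str
    severity: str            # assumed values: "suspend" (defederate) or "silence" (limit)
    public_comment: str = ""


def parse_blocklist_csv(text: str) -> list[BlockEntry]:
    """Parse a blocklist CSV whose header uses "#domain", "#severity", etc.

    The "#"-prefixed column names are an assumption modeled on Mastodon's
    domain-block export; verify them against the version you run.
    """
    entries = []
    for row in csv.DictReader(io.StringIO(text)):
        domain = (row.get("#domain") or "").strip().lower()
        if not domain:
            continue  # skip rows that don't name a domain
        # This sketch treats a missing severity as "suspend", the strictest option.
        severity = (row.get("#severity") or "suspend").strip().lower()
        comment = (row.get("#public_comment") or "").strip()
        entries.append(BlockEntry(domain, severity, comment))
    return entries


sample = """#domain,#severity,#public_comment
bad.example,suspend,harassment
lax.example,silence,poor moderation
"""
for entry in parse_blocklist_csv(sample):
    print(entry.domain, entry.severity)
```

Defaulting a missing severity to "suspend" is this sketch's choice, not necessarily what any particular server does. An admin who'd rather err on the side of limiting could default to "silence" instead.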

Starting in 2023, shared blocklists from Seirdy (Rohan Kumar), Gardenfence, and Oliphant all gained significant adoption, as did IFTAS' Do Not Interact (DNI) and CARIAD in 2024. Here's how Seirdy's My Fediverse blocklists describes the 140+ instances on his FediNuke.txt blocklist:

"It’s kind of hard to overlook how shitty each instance on the FediNuke.txt subset is. Common themes tend to be repeated unwelcome sui-bait2 from instance staff against individuals, creating or spreading dox materials against other users, unapologetic bigotry, uncensored shock content, and a complete lack of moderation."

Seirdy's Receipts section gives plenty of examples of just how shitty these instances are. For froth.zone, for example, Seirdy has links including "Blatant racism, racist homophobia", and notes that "Reporting is unlikely to help given the lack of rules against this, some ableism from the admin and some racism from the admin." Seirdy also links to another instance's "about" page which boasts that "racial pejoratives, NSFW images & videos, insensitivity and contempt toward differences in sexual orientation and gender identification, and so-called “cyberbullying” are all commonplace on this instance." Nice.

Blocklists aren't limited to the shittiest instances. Seirdy also provides a Tier 0 list of about 380 instances, described as "a good starting point for your instance’s blocklist". Oliphant.Social Mastodon Blocklists and Seirdy's Supplemental Blocklists highlight a range of other approaches, including some that focus on bridges to other networks, specific software platforms, or instances that have open registrations.

Many widely-adopted blocklists take an algorithmic approach:

- Gardenfence is a pure "consensus" (or "aggregate") blocklist combining the results of multiple blocklists, with some threshold for inclusion; Oliphant's older tier0, tier1, tier2, and unified-max blocklists also took this approach.

- Seirdy by contrast uses blocklists from multiple "bias sources" as an initial step in the process, and then applies overrides to produce the "semi-curated" Tier0 and curated FediNuke.txt. IFTAS' CARIAD also includes a manual review step.

- Oliphant's current _unified_tier0_blocklist.csv combines blocks from Seirdy's Tier0, Gardenfence, and IFTAS DNI, resulting in a list slightly longer than Seirdy's.

- Fediseer provides a tool for combining the "censured lists" of multiple instances.
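The core of a "consensus" blocklist can be sketched as a k-of-n vote: count how many source lists include each domain and keep the ones at or above a threshold. This is a generic Python illustration, not Gardenfence's or Oliphant's actual code; the source names and domains are hypothetical.

```python
from collections import Counter


def consensus_blocklist(sources: dict[str, set[str]], threshold: int) -> set[str]:
    """Return the domains that appear on at least `threshold` source lists."""
    counts = Counter()
    for domains in sources.values():
        counts.update(set(domains))  # a source counts at most once per domain
    return {domain for domain, n in counts.items() if n >= threshold}


# Hypothetical input lists; in practice these would be other instances' blocklists.
sources = {
    "source-a": {"nazi.example", "spam.example", "personal.example"},
    "source-b": {"nazi.example", "spam.example"},
    "source-c": {"nazi.example"},
}

print(sorted(consensus_blocklist(sources, threshold=2)))
# → ['nazi.example', 'spam.example']
print(sorted(consensus_blocklist(sources, threshold=3)))
# → ['nazi.example']
```

Note how `personal.example`, listed by only one source (say, a block made for personal reasons), drops out at a threshold of 2, and how raising the threshold to 3 also drops `spam.example`. Stricter agreement dampens any one source's biases but leaves more block-worthy instances off the list.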

On the one hand, a purely algorithmic approach reduces the direct impact of bias and mistakes by the person or organization providing the blocklist. Then again, it introduces other possibilities for mistakes or bias: in one or more of the sources, in the selection of which sources to use, or in the algorithm that combines the various blocklists. Seirdy's Mistakes made analysis documents a challenge with this kind of approach.

"One of Oliphant’s sources was a single-user instance with many blocks made for personal reasons: the admin was uncomfortable with topics related to sex and romance. Blocking for personal reasons on a personal instance is totally fine, but those blocks shouldn’t make their way onto a list intended for others to use....

Tyr from pettingzoo.co raised important issues in a thread after noticing his instance’s inclusion in the unified-max blocklist. He pointed out that offering a unified-max list containing these blocks is a form of homophobia: it risks hurting sex-positive queer spaces."

Requiring more agreement between the sources reduces the impact of bias from any individual source – although decisions by multiple instances aren't necessarily completely independent,3 so there can still be issues. And requiring more agreement also increases the number of block-worthy instances that aren't on the list. Gardenfence, for example, requires agreement of six of its seven sources, and has 136 entries; as the documentation notes, "there are surely other servers that you may wish to block that are not listed." Leaving instances off lists can also disproportionately affect people who are likely to be harassed; IFTAS, for example, notes that

"CARIAD does not protect LGBTQ, BIPOC, BAME or other marginalised communities."

Any blocklist, whether for an individual instance or intended to be shared between instances, reflects its creators' perspectives on this and dozens of other questions where there's no fediverse-wide agreement about when it's appropriate to defederate or silence other instances. Any blocklist by definition takes a position on all these issues, so (as Seirdy notes) no matter what blocklist you're starting with

"if your instance grows larger (or if you intend to grow): you should be intentional about your moderation decisions, present and past. Your members ostensibly trust you, but not me."

Any blocklist is likely to be supported by those whose perspectives are similar to its creators' – and vehemently opposed by those who see things differently. And some people vehemently oppose the entire idea of blocklists, either on philosophical grounds or because of the risks of harm and abuse of power.

Widely shared blocklists can lead to significant harm

Blocklists have a long history. Usenet killfiles have existed since the 1980s, email DNSBLs since the late 90s, and Twitter users created The Block Bot in 2012. The perspective of a Block Bot user whom Shagun Jhaver et al. quote in Online harassment and content moderation: The case of blocklists (2018, PDF here) is a good example of the value blocklists can provide to marginalized people:

“I certainly had a lot of transgender people say they wouldn’t be on the platform if they didn’t have The Block Bot blocking groups like trans-exclusionary radical feminists – TERFs.”

That said, widely-shared blocklists introduce risks of major harms – harms that are especially likely to fall on already-marginalized communities.

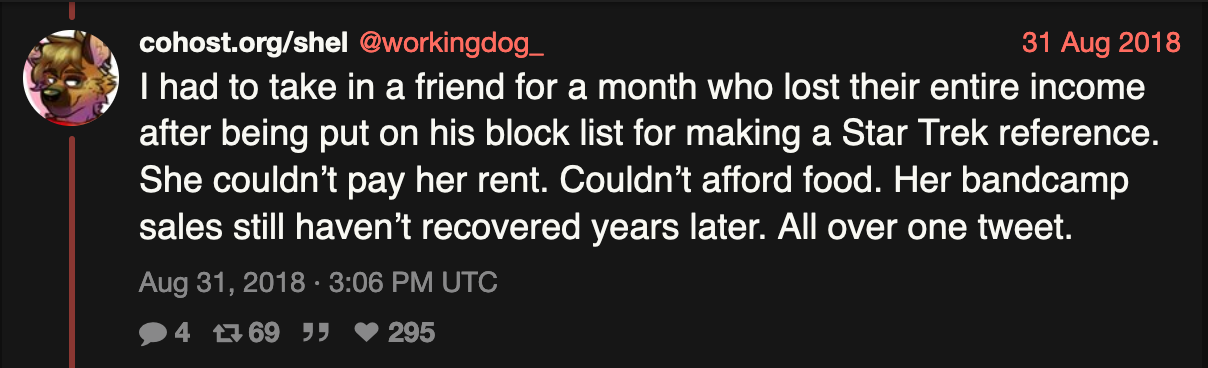

workingdog's thread from 2018 (written during the Battle of Wil Wheaton) describes a situation involving a different Twitter blocklist that many current Mastodonians had first-hand experience with: a cis white person arbitrarily put many trans people who had done nothing wrong on his widely-adopted blocklist of "most abusive Twitter scum" and as a result ...

"Tons of independent trans artists who earn their primary income off selling music on bandcamp, Patreon, selling art commissions, etc. got added to this block list and saw their income drop to 0. Because they made a Star Trek reference at a celebrity. Or for doing nothing at all."

Many Black people were on Wheaton's blocklists as well, for making a Star Trek reference or for doing nothing at all, with equally severe impacts. An earlier blocklist that Wheaton had used as a starting point similarly had many trans and Black people on it just because the blocklist curator didn't like them.

Email blocklists have seen similar abuses; RFC 6471: Overview of Best Email DNS-Based List (DNSBL) Operational Practices (2012) notes that

“some DNSBL operators have been known to include "spite listings" in the lists they administer -- listings of IP addresses or domain names associated with someone who has insulted them, rather than actually violating technical criteria for inclusion in the list.”

And intentional abuse isn’t the only potential issue. RFC 6471 mentions a scenario where making a certain mistake on a DNSBL could lead to everybody using the list rejecting all email ... and mentions in passing that this is something that's actually happened. Oops.

"The trust one must place in the creator of a blocklist is enormous; the most dangerous failure mode isn’t that it doesn’t block who it says it does, but that it blocks who it says it doesn’t and they just disappear."

– Erin Shepherd, A better moderation system is possible for the social web

"Blocklists can be done carefully and accountably! But almost none of them ARE."

– Adrienne, a veteran of The Block Bot

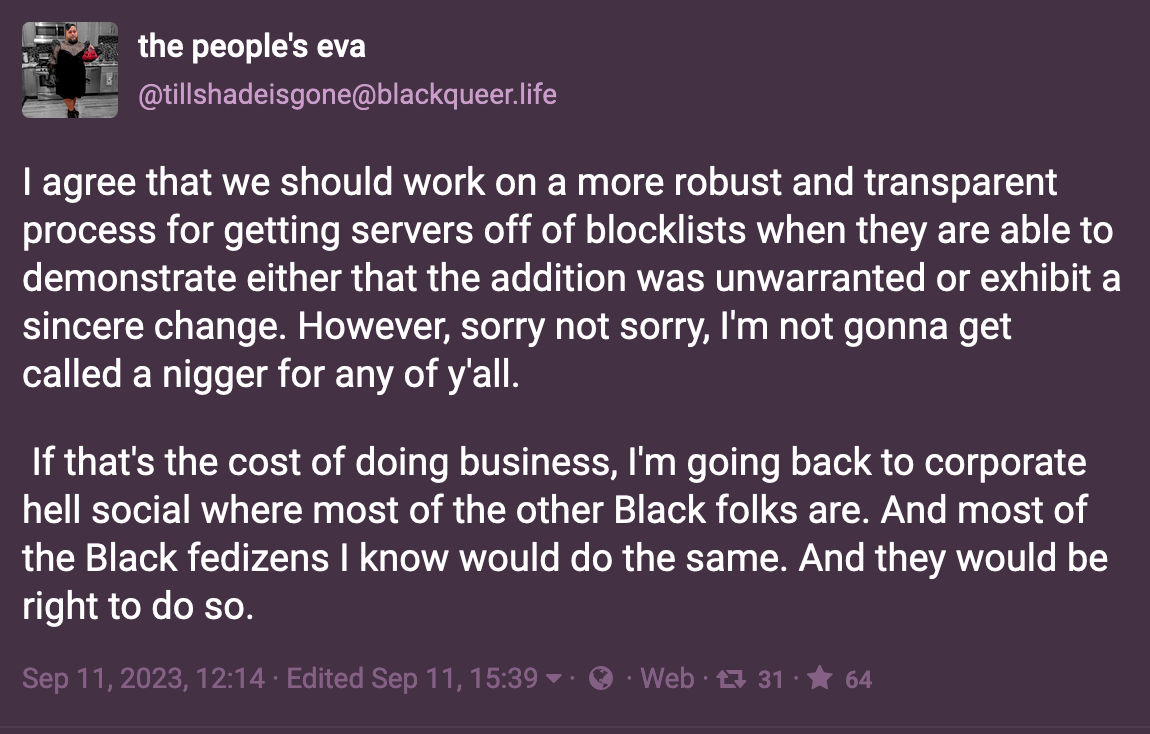

People involved in creating and using blocklists can take steps to limit the harms. An oversight and review process can reduce the risk of instances getting placed on a blocklist by mistake, or due to the creators' biases. Appeals processes can help deal with the situation when such mistakes almost inevitably happen anyway. Sometimes problematic instances clean up their act, so blocklists need to have a process for being updated. Admins considering using a blocklist can double-check the entries to make sure they’re accurate and aligned with their instances’ norms. The upcoming section on steps towards better blocklists discusses these and other improvements in more detail.

However, today's fediverse blocklists often have very informal review and appeals processes – basically relying on instances who have been blocked by mistake (or people with friends on those instances) to surface problems and kick off discussions by private messages or email. Some, like Seirdy's FediNuke, make it easier for admins to double-check them by including reasons and receipts (links or screenshots documenting specific incidents) for why instances appear on them. Others don't, or have only vague explanations ("poor moderation") for most instances.

To be clear, as #FediBlock and receipts discusses, receipts aren't a panacea.

"Some of the incidents that lead to instances being blocked can be quite complex, so receipts are likely to be incomplete at best. As Sven Slootweg points out, 'a lot of bans/defederations/whatever are not handed out for specific directly-observable behaviour, but for the offender's active refusal to reflect or reconsider when approached about it,' which means that to understand the decision 'you actually need to do the work of understanding the full context.'

In other situations, providing receipts can open up the blocklist maintainer (or people who had reported problems or previously been targeted) to legal risk."

And even when receipts exist and are available, it’s likely to take an admin a long time to check all the entries on a blocklist – and there are still likely to be disagreements about how receipts should be interpreted.

Still, despite their limitations, receipts can be useful in many circumstances; I (and others) frequently refer to Seirdy's list. Other blocklists have room for improvement on this front.

Blocklists potentially centralize power – although they can also counter other power-centralizing tendencies

"the blocklist/allowlist path for the fediverse is a band-aid. it's a doomed approach to moderation, and it always converges on centralization as it continues to run."

– Christine Lemmer-Webber, 2022. "OcapPub: Towards networks of consent" goes into more detail.

Another big concern about widely-adopted blocklists is their tendency to centralize power. Imagine a hypothetical situation where every fediverse instance had one of a small number of blocklists that had been approved by a central authority. That would give so much power to the central authority and the blocklist curator(s) that it would almost certainly be a recipe for disaster.

And even in less extreme situations, blocklists can centralize power. In the email world, for example, blocklists have contributed to a situation where, despite a decentralized protocol, a very small number of email providers have over 90% of the installed base. You can certainly imagine large providers using a similar market-dominance technique in the fediverse. Even today, I've seen several people suggest that newcomers should choose large instances like mastodon.social, which many regard as "too big to block." And looking forward, what happens if and when Facebook parent company Meta's new Threads product adds fediverse integration?

Then again, blocklists can also counter other centralizing market-dominance tactics. Earlier this year, for example, Mastodon BDFL (Benevolent Dictator for Life) Eugen Rochko changed the onboarding of the official release to sign newcomers up by default to mastodon.social, the largest instance in the fediverse – a clear example of centralization, especially since Rochko is also CEO of Mastodon gGmbH, which runs mastodon.social.4 One of the justifications for this is that otherwise newcomers could wind up on an instance that doesn't block known bad actors and have a really horrible experience. Broad adoption of "worst-of-the-worst" blocklists would provide a decentralized way of addressing this concern.

For that matter, broader adoption of blocklists that silence or block mastodon.social could directly undercut Mastodon gGmbH's centralizing power in a way that was never tried (and is no longer feasible) with Gmail. The FediPact – hundreds of instances agreeing to block the hell out of any Meta instances – is another obvious example of using a blocklist to counter dominance.

Today's fediverse relies on instance blocking and blocklists

It would be great if Mastodon and other fediverse software had other good tools for dealing with harassment and abuse to complement or replace instance-level blocking – and, ideally, reduce or even eliminate the need for blocklists.

But it doesn't, at least not yet.

That needs to change, and Steps towards a safer fediverse (part 5 of this series) discusses straightforward short-term improvements that could have big impact. Realistically, though, it's not going to change overnight – and a heck of a lot of people want alternatives to Twitter right now.

So despite the costs of instance-level blocking, and the potential harms of blocklists, they're the only currently available solution for dealing with the hundreds of Nazi instances – and thousands of weakly-moderated instances, including some of the biggest, where moderators frequently don't take action on racist, anti-Semitic, anti-Muslim, and other hateful content. As a result, today's fediverse is very reliant on them.

Steps towards better instance blocking and blocklists

"Notify users when relationships (follows, followers) are severed, due to a server block, display the list of impacted relationships, and have a button to restore them if the remote server is unblocked"

– Mastodon CTO Renaud Chaput, discussing the tentative roadmap for the upcoming Mastodon 4.3 release

Since the fediverse is likely to continue to rely on instance blocking and blocklists at least for a while, how can they be improved? Mastodon 4.3's planned improvements to instance blocking are an important step. Improvements in the announcements feature (currently a "maybe" for 4.3) would also make it easier for admins to notify people about upcoming instance blocks. Hopefully other fediverse software will follow suit.
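The "display the list of impacted relationships" step from Chaput's roadmap item boils down to filtering an instance's follow relationships by the blocked domain. Here's a minimal Python sketch. The `Relationship` type and the function names are hypothetical, and this isn't Mastodon's implementation. It also assumes that blocking a domain covers its subdomains.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Relationship:
    local_account: str
    remote_account: str  # "user@domain", e.g. "alice@bad.example"
    direction: str       # "follows" or "followed_by"


def covered_by_block(remote_domain: str, blocked_domain: str) -> bool:
    """True if a block on `blocked_domain` covers `remote_domain`.

    This sketch assumes a block also covers subdomains.
    """
    remote, blocked = remote_domain.lower(), blocked_domain.lower()
    return remote == blocked or remote.endswith("." + blocked)


def severed_by_block(relationships, blocked_domain):
    """Group the relationships a domain block would sever, by local account."""
    impacted: dict[str, list[Relationship]] = {}
    for rel in relationships:
        remote_domain = rel.remote_account.rsplit("@", 1)[-1]
        if covered_by_block(remote_domain, blocked_domain):
            impacted.setdefault(rel.local_account, []).append(rel)
    return impacted


rels = [
    Relationship("me", "alice@bad.example", "follows"),
    Relationship("me", "bob@fine.example", "follows"),
    Relationship("you", "carol@media.bad.example", "followed_by"),
]
for account, lost in severed_by_block(rels, "bad.example").items():
    print(account, [r.remote_account for r in lost])
```

A real notification would also need to record these relationships somewhere durable, so that the "restore them if the remote server is unblocked" button has something to restore.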

Another straightforward improvement along these lines would be an option to have new federation requests initially accepted in "limited" mode. By reducing exposure to racist content, this would likely reduce the need for blocking.

For blocklists themselves, one extremely important step is to let new instance admins know that they should consider initially blocking worst-of-the-worst instances (and their members are likely to get hit with a lot of abuse if they don't) and offering them some choices of blocklists to use as a starting point. This is especially important for friends-and-family instances which don't have paid admins or moderators. Hosting companies play a critical role here – especially if Mastodon, Lemmy, and other software platforms continue not to support this functionality directly (although obviously it would be better if they do!)

Since people from marginalized communities are likely to face the most harm from blocklist abuse, involvement of people from different marginalized communities in creating and reviewing blocklists is vital. One obvious short-term step is for blocklist curators (and instance admins whose blocklists are used as inputs to aggregated blocklists) to provide reasons why instances are on the list, check where receipts exist (and potentially provide access to them, at least in some circumstances), recalibrate suspension vs. silencing in some cases, and so on. Independent reviews are likely to catch problems that a blocklist creator misses, and an audit trail of bias and accuracy reviews could make it much easier for instances to check whether a blocklist has known biases and mistakes before deploying it. Of course, this is a lot of work; asking marginalized people to do it as volunteers is relying on free labor, so who's going to pay for it?

A few other directions worth considering:

- More nuanced control over when to automatically apply blocklist updates could limit the damage from bugs or mistakes. For example, Rich Felker has suggested that blocklist management tools should have safeguards to prevent automated block actions from severing relationships without notice.

- Providing tags for the different reasons that instances are on blocklists – or blocklists that focus on a single reason for blocking, like Seirdy's BirdSiteLive and bird.makeup blocklist – could make it much easier for admins to use blocklists as a starting point, for example by distinguishing between instances that are on a blocklist for racist and anti-trans harassment from instances that are there only because of CW or bot policies that the blocklist curator considers overly lax.

- Providing some access to receipts and an attribution trail of who has independently decided an instance should be blocked could help admins and independent reviewers make better judgments about which blocklist entries they agree with. As discussed above, receipts are a complicated topic, and in many situations may be only partial and/or not broadly shareable5; but as Seirdy’s FediNuke.txt list shows, there are quite a few situations where they are likely to be available – and Seirdy notes that "I and most mods I’ve worked with also find receipt archives to be better resources than blocklists, though the two are not mutually exclusive."

- Shifting to a view of a blocklist as a collection of advisories or recommendations, and providing tools for instances to better analyze them, could help mitigate harm in situations where biases do occur. Emelia Smith's work in progress on FIRES (Fediverse Intelligence Recommendations & Replication Endpoint Server) is a valuable step in this direction.

- Learning from experiences with email blocking and IP blocking – and, where possible, building on infrastructure that already exists.
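Several of these ideas (safeguards on automatic updates, reason tags, and treating entries as advisories rather than commands) can be combined in a small planning step that sorts incoming entries before anything is applied. The Python sketch below is a hypothetical illustration, not FIRES's data model or any existing tool's behavior. The `Advisory` fields, the tag vocabulary, and the `follow_counts` input are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Advisory:
    domain: str
    severity: str                  # "suspend" or "silence"
    tags: frozenset = frozenset()  # hypothetical reason tags, e.g. {"harassment"}


@dataclass
class UpdatePlan:
    auto_apply: list = field(default_factory=list)
    needs_review: list = field(default_factory=list)
    skipped: list = field(default_factory=list)


def plan_update(already_blocked: set[str], incoming: list[Advisory],
                accepted_tags: set[str], follow_counts: dict[str, int]) -> UpdatePlan:
    """Sort new blocklist entries into auto-apply, human review, or skip."""
    plan = UpdatePlan()
    for adv in incoming:
        if adv.domain in already_blocked:
            continue  # nothing new to do for this domain
        if not adv.tags:
            plan.needs_review.append(adv)  # no stated reason: a human should look
        elif not (adv.tags & accepted_tags):
            plan.skipped.append(adv)       # listed only for reasons this instance doesn't share
        elif follow_counts.get(adv.domain, 0) > 0:
            plan.needs_review.append(adv)  # would sever existing follows: notify first
        else:
            plan.auto_apply.append(adv)
    return plan


incoming = [
    Advisory("hate.example", "suspend", frozenset({"harassment"})),
    Advisory("lax.example", "silence", frozenset({"cw-policy"})),
    Advisory("friends.example", "suspend", frozenset({"racism"})),
    Advisory("mystery.example", "suspend"),
]
plan = plan_update(set(), incoming, {"harassment", "racism"}, {"friends.example": 3})
print([a.domain for a in plan.auto_apply])    # → ['hate.example']
print([a.domain for a in plan.needs_review])  # → ['friends.example', 'mystery.example']
print([a.domain for a in plan.skipped])       # → ['lax.example']
```

Holding back entries that would sever existing follows is one way to get the kind of safeguard Rich Felker describes: nothing that cuts people off from each other happens without a human looking at it first.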

What about blocklists for individuals (instead of instances)?

Good question. In situations where only a handful of people on an instance are behaving badly, blocking or muting them individually can limit the harm while still allowing connections with others on that instance. Most fediverse software provides the ability to block and mute individuals, so it's kind of surprising that Twitter-like blocklists and tools like Block Party haven't emerged yet. It wouldn't surprise me if that changes in 2024.

Identifying bias in blocklists

One of the biggest concerns about blocklists is the possibility of systemic bias. Algorithmic systems tend to magnify biases, so "consensus" blocklists require extra scrutiny, but it can happen with manually curated lists as well.

Algorithmic audits (a structured approach to detecting biases and inaccuracies) are one good way to reduce risks – although again, who's going to pay for it? Additional curation and review (by an intersectionally-diverse team of people from various marginalized perspectives) could also be helpful. Hrefna has some excellent suggestions as well, such as preprocessing inputs to add additional metadata and treating connected sources (for example blocklists from instances with shared moderators) as a single source. And there are a lot of algorithmic justice experts in the fediverse, so it's also worth exploring different anti-oppressive algorithms specifically designed to detect and reduce biases.
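Hrefna's suggestion of treating connected sources as a single source can be sketched in Python: merge sources known to be connected (here with a small union-find structure), then count how many independent groups list each domain. The function name, the source names, and the assumption that connections are known in advance are all hypothetical. In practice, figuring out which sources are connected is the hard part, and footnote 3's point about admins copying each other's decisions isn't captured here.

```python
def independent_support(sources: dict[str, set[str]],
                        connections: list[tuple[str, str]]) -> dict[str, int]:
    """Count, per domain, how many *independent* source groups list it.

    `connections` pairs sources that should not count separately (for example,
    instances that share moderators). Connected sources are merged with a
    small union-find structure before counting.
    """
    parent = {s: s for s in sources}

    def find(s: str) -> str:
        parent.setdefault(s, s)  # tolerate connections naming unknown sources
        while parent[s] != s:
            parent[s] = parent[parent[s]]  # path halving keeps lookups cheap
            s = parent[s]
        return s

    for a, b in connections:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    groups_per_domain: dict[str, set[str]] = {}
    for source, domains in sources.items():
        for domain in domains:
            groups_per_domain.setdefault(domain, set()).add(find(source))
    return {domain: len(groups) for domain, groups in groups_per_domain.items()}


sources = {
    "instance-a": {"x.example"},
    "instance-b": {"x.example", "y.example"},
    "instance-c": {"x.example"},
}
connections = [("instance-a", "instance-b")]  # hypothetical: shared moderators

support = independent_support(sources, connections)
print(support["x.example"])  # → 2 (three lists, but only two independent groups)
```

Feeding these counts into a threshold, instead of raw list counts, keeps two instances with shared moderators from looking like two independent votes.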

Of course, none of these approaches are magic bullets, and they’ve all got complexities of their own. When trying to analyze a blocklist's biases against trans people, Black people, Jews, and Muslims for example:

- There's no census of the demographics of instances in the fediverse, so it's not clear how to determine whether trans-led, Black-led, Jewish-led, or Muslim-led instances (or instances that host a lot of trans, Black, Jewish, and/or Muslim people) are overrepresented.

- Large instances like mastodon.social are sources of a lot of racism, anti-Semitism, and Islamophobia (etc). If a blocklist doesn't at least limit them, does that mean it's inherently biased against Black people? If a blocklist does limit or block them, then it's blocking the largest cis white led instances ... so does that mean it's statistically not biased against trans- and Black-led instances?

- Suppose the largest Jewish instance is a source of false reporting about pro-Palestinian posts. If it appears on the blocklist, is that evidence of anti-Jewish bias? After all, it's the largest Jewish instance! But if it doesn't appear on a blocklist, is that evidence of anti-Palestinian bias? And more generally, when looking at whether a blocklist is biased against Jews or Muslims, whose definitions of anti-Semitism get used?

- What about situations where differing norms (for example whether spamming #FediBlock is grounds for defederation, or whether certain jokes are racist or just good clean fun) disproportionately affect Black people and/or trans people?

- What about intersectional aspects such as biases against Black women or trans people of color? In "a meta thread oh no oh god oh fuck", Seirdy notes that

"No over-representation of any marginalized group .... is a goal to strive for but impossible to strictly evaluate. For example: if a single instance for a marginalized group ruptures into multiple separate instances, how should that impact the group’s representation?"

Which brings us back to a point I made earlier:

"It would be great if Mastodon and other fediverse software had other good tools for dealing with harassment and abuse to complement instance-level blocking – and, ideally, reduce the need for blocklists."

To be continued!

The discussion continues in

- It’s possible to talk about The Bad Space without being racist or anti-trans – but it’s not as easy as it sounds

- Compare and contrast: Fediseer, FIRES, and The Bad Space

- Steps towards a safer fediverse

- A golden opportunity for the fediverse – and whatever comes next

Here's a sneak preview of the discussion of The Bad Space, a catalog of instances that can be used as the basis for various tools – including blocklists.

The Bad Space's web site at thebad.space provides a web interface that makes it easy to look up an instance to see whether concerns have been raised about its moderation, and an API (application programming interface) making the information available to software as well. The Bad Space currently has over 3300 entries – a bit over 12% of the 24,000+ instances in the fediverse....

The Bad Space is useful today, and has the potential to be the basis of other useful safety tools – for Mastodon and the rest of the fediverse, and potentially for other decentralized social networks as well. Many people did find ways to have productive discussions about The Bad Space without being racist or anti-trans, highlighting areas for improvement and potential future tools. So from that perspective quite a few people (including me) see it as off to a promising start.

Then again, opinions differ. For example, some of the posts I'll discuss in an upcoming installment of this series (tentatively titled Racialized disinformation and misinformation: a fediverse case study) describe The Bad Space as "pure concentrated transphobia" that "people want to hardcode into new Mastodon installations" in a plot involving somebody who's very likely a "right wing troll" working with an "AI art foundation aiming to police Mastodon" as part of a "deliberate attempt to silence LGBTQ+ voices."

Alarming if true!

To see new installments as they're published, follow @thenexusofprivacy@infosec.exchange or subscribe to the Nexus of Privacy newsletter.

Notes

1 I'm using LGBTQIA2S+ as a shorthand for lesbian, gay, gender non-conforming, genderqueer, bi, trans, queer, intersex, asexual, agender, two-spirit, and others who are not straight, cis, and heteronormative. Julia Serano's trans, gender, sexuality, and activism glossary has definitions for most of these terms, and discusses the tensions between ever-growing and always incomplete acronyms and more abstract terms like "gender and sexual minorities". OACAS Library Guides' Two-spirit identities page goes into more detail on this often-overlooked intersectional aspect of non-cis identity.

2 Suicide-baiting: telling or encouraging somebody to kill themselves.

3 Some instances share moderators, have moderators who are friends with each other, or make moderation decisions jointly. And even if there's no connection between instances, if somebody announces a blocking decision to #FediBlock – or an instance shows up on a widely-used blocklist – other instances are likely to block as well. Of course they should verify the claims before deciding to block; but in situations where that doesn't happen and they just take the original poster's word for it, then the additional protection of requiring multiple actions is illusory.

3.5 "A bug leads to messy discussions, some of which are productive" discusses a related situation where a software bug led to a high-profile LGBT-focused instance briefly and mistakenly appearing on The Bad Space, an instance catalog that similarly aggregates instance blocklists.

4 Update, January 2025: November 2024's A faux “Eternal September” turns into flatness discusses Rochko's change of the default to mastodon.social in the sections "Why not help people choose an instance that's a good fit?" and "But no."

5 Update, January 2025: The Laelaps anti-Zoophilia labeler on Bluesky uses GitHub to track its public evidence.

Update log

Ongoing: fixing typos and confusing wording, adding new links, other minor changes

November 17, 2024: changed title to make it explicit that this is about the ActivityPub Fediverse

January 7, 2025: update to include IFTAS' CARIAD, changes in Oliphant blocklists, add footnotes 3.5, 4 and 5, quotes from Seirdy's a meta thread oh no oh god oh fuck, begin working on notes for Bluesky/AT Proto