Automated Systems and Discrimination: Comments at FTC Public Forum for Commercial Surveillance and Data Security

An extended remix of my two-minute public comments

The September 8 FTC Public Forum on Commercial Surveillance and Data Security included time for public comments at the end. Here's an extended version of what I said.

I’m Jon Pincus, founder of the Nexus of Privacy Newsletter, where I write about the connections between technology, policy, and justice. As a long-time privacy advocate, I greatly appreciate your attention to commercial surveillance. My career includes founding a successful software engineering startup, serving as General Manager of Competitive Strategy at Microsoft, and co-chairing the ACM Computers, Freedom, and Privacy Conference. Last year I was a member of the Washington state Automated Decision-making Systems Workgroup, and my comments today focus on discrimination and automated systems.

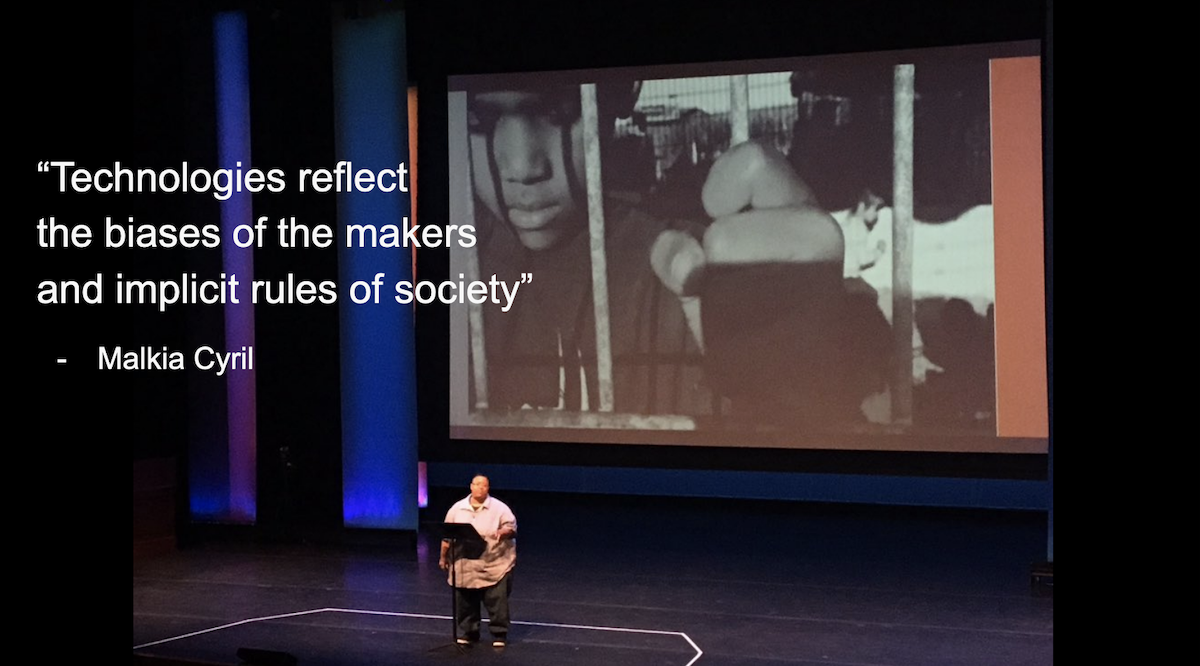

Technology can be liberating … but it can also reinforce and magnify existing power imbalances and patterns of discrimination. As documented by researchers like Dr. Safiya Noble in Algorithms of Oppression and Dr. Joy Buolamwini, Timnit Gebru, and Inioluwa Deborah Raji in the Gender Shades project, today’s algorithmic systems are error-prone and the datasets they’re trained on have significant biases.

As with other forms of surveillance, the harms usually fall much more heavily on the historically underserved communities the FTC has committed to protecting. Who gets wrongfully arrested as a result of facial recognition errors? Nijeer Parks, Robert Williams, and Michael Oliver … Black people!

Regulation is clearly needed, and it needs to be designed to protect the people who are most at risk.

The Algorithmic Justice League’s four principles are a good starting point:

- affirmative consent

- meaningful transparency

- continuous oversight and accountability, and

- actionable critique focused on shifting industry practices among those creating and commercializing today’s systems.

AJL founder Buolamwini, research collaborator Raji, and Director of Research & Design Sasha Costanza-Chock (known for their work on Design Justice) recently collaborated on Who Audits the Auditors: Recommendations from a field scan of the algorithmic auditing ecosystem, the first comprehensive field scan of the artificial intelligence audit ecosystem.

The policy recommendations in Who Audits the Auditors highlight key considerations in several specific areas where FTC rulemaking could have a major impact:

- Require the owners and operators of AI systems to engage in independent algorithmic audits against clearly defined standards

- Notify individuals when they are subject to algorithmic decision-making systems

- Mandate disclosure of key components of audit findings for peer review

- Consider real-world harm in the audit process, including through standardized harm incident reporting and response mechanisms

- Directly involve the stakeholders most likely to be harmed by AI systems in the algorithmic audit process

- Formalize evaluation and, potentially, accreditation of algorithmic auditors.

And the details of the rulemaking matter a lot. Too often, well-intended regulation has weaknesses that commercial surveillance companies, with their hundreds of lawyers, can easily exploit. Looking at proposals through an algorithmic justice lens can highlight where they fall short.

For example, here's how the proposed American Data Privacy and Protection Act (ADPPA) consumer privacy bill stacks up against AJL's recommendations:

- ADPPA doesn't require independent auditing, instead allowing companies like Facebook to do their own algorithmic impact assessments. And government contractors acting as service providers for ICE and law enforcement don't even have to do algorithmic impact assessments!

Update, September 13: Color of Change's Black Tech Agenda notes, "By forcing companies to undergo independent audits, tech companies can address discrimination in their decision-making and repair the harm that algorithmic bias has done to Black communities regarding equitable access to housing, health care, employment, education, credit, and insurance."

- ADPPA doesn’t require affirmative consent to being profiled – or even offer the opportunity to opt out.

- ADPPA doesn’t mandate any public disclosure of algorithmic impact assessments – not even summaries or key components.

- ADPPA doesn't require assessments to consider real-world harms – or even to measure impact.

- ADPPA doesn't have any requirement at all to involve external stakeholders in the assessment process – let alone to directly involve the stakeholders most likely to be harmed by AI systems.

- ADPPA allows anybody at a company to do an algorithmic impact assessment – and it's not even clear whether it gives the FTC rulemaking authority to evaluate and potentially accredit assessors or auditors.

The article "ADPPA’s algorithmic impact assessments are too weak to protect civil rights – but it’s not too late to strengthen them" goes into more detail.

More positively, other regulatory proposals in the US and elsewhere show that these issues can be addressed. Rep. Yvette Clarke's Algorithmic Accountability Act of 2022, for example, would require companies performing algorithmic impact assessments (AIAs) "to the extent possible, to meaningfully consult (including through participatory design, independent auditing, or soliciting or incorporating feedback) with relevant internal stakeholders (such as employees, ethics teams, and responsible technology teams) and independent external stakeholders (such as representatives of and advocates for impacted groups, civil society and advocates, and technology experts) as frequently as necessary."

AJL's recommendation of directly involving the stakeholders most likely to be harmed by AI systems also applies to the process of creating regulations. In Hiding OUT: A Case for Queer Experiences Informing Data Privacy Laws, A. Prince Albert III (Policy Counsel at Public Knowledge) suggests using queer experiences as an analytical tool to test whether a proposed privacy regulation actually protects people’s privacy. Stress-testing privacy legislation with a queer lens is an example of how effective this approach can be at highlighting needed improvements. It's also vital to look at how proposed regulations affect pregnant people, rape and incest survivors, immigrants, non-Christians, disabled people, Black and Indigenous people, unhoused people, and others who are being harmed today by commercial surveillance.

So I implore you, as you continue the rulemaking process, please make sure that the historically underserved communities most harmed by commercial surveillance are at the table – and being listened to.