It's possible to talk about The Bad Space without being racist or anti-trans – but it's not as easy as it sounds

Part 3 of "Golden opportunities for the fediverse – and whatever comes next"

The title applies to me as well, and I've probably said some things badly in this article. Apologies in advance. I'll update the post as problems surface.

Join the discussion in the fediverse on infosec.exchange!

The problem The Bad Space is focusing on is certainly a critical one. As I said in Mastodon and today’s fediverse are unsafe by design and unsafe by default (the first post in this series):

"The 1.25 million active users in today's fediverse are spread across more than 20,000 instances (aka servers) running a wide variety of software. On instances with active and skilled moderators and admins it can be a great experience. But not all instances are like that. Some are bad instances, filled with nazis, racists, misogynists, anti-LGBTQIA2S+ haters1, anti-Semites, Islamophobes, and/or harassers. And even on the vast majority of instances whose policies prohibit racism (etc.), relatively few of the moderators in today's fediverse have much experience with anti-racist or intersectional moderation – so very often racism (etc.) is ignored or tolerated when it inevitably happens."

As Lady pointed out in November 2022, this isn't a new problem.

"bad instances have always existed and every good thing about the fediverse today was hard-fought and hard-won. making it better will mean more work, fighting, and winning"

Over the years, innovations like instance-level blocking and #fediblock have helped make progress. But as the widespread racist and anti-trans bigotry and harassment in the messy discussions about The Bad Space over the last few months highlight, there's still a lot more work needed – and a lot more fighting.

Ro's certainly got the right background to work on fediverse safety tools. As well as coding skills and years of experience in the fediverse, he and Artist Marcia X were admins of Play Vicious, which, as one of the few Black-led instances in the fediverse, was the target of vicious harassment until it shut down in 2020. And The Bad Space's approach of designing from the perspective of marginalized communities is a great path to creating a fediverse that's safer and more appealing for everybody (well, except for harassers, racists, fascists, and terfs – but that's a good thing). As Afsaneh Rigot says in Design From the Margins:

"After all, when your most at-risk and disenfranchised are covered by your product, we are all covered."

The Bad Space is still at an early stage, and like all early-stage software has bugs and limitations. Many of the sites listed don't have descriptions; some of the descriptions may be out-of-date; there's no obvious appeals process for sites that think they shouldn't be listed. A mid-September bug led to some instances being listed by mistake, and the user interface at the time didn't provide any information about whether instances were limited (aka silenced) or suspended (aka defederated) by other instances. The bug's been fixed, the UI's been improved ... but of course there may well be other false positives, bugs, etc etc etc. It's software!

Still, The Bad Space is useful today, and has the potential to be the basis of other useful safety tools – for Mastodon and the rest of the fediverse,2 and potentially for other decentralized social networks as well. Many people did find ways to have productive discussions about The Bad Space without being racist or anti-trans, highlighting areas for improvement and potential future tools. So from that perspective quite a few people (including me) see it as off to a promising start.

Then again, opinions differ. For example, some of the posts I'll discuss in an upcoming installment of this series (tentatively titled Racialized disinformation and misinformation: a fediverse case study) describe The Bad Space as "pure concentrated transphobia" that "people want to hardcode into new Mastodon installations" in a plot involving somebody who's very likely a "right wing troll" working with an "AI art foundation aiming to police Mastodon" as part of a "deliberate attempt to silence LGBTQ+ voices."

Alarming if true!

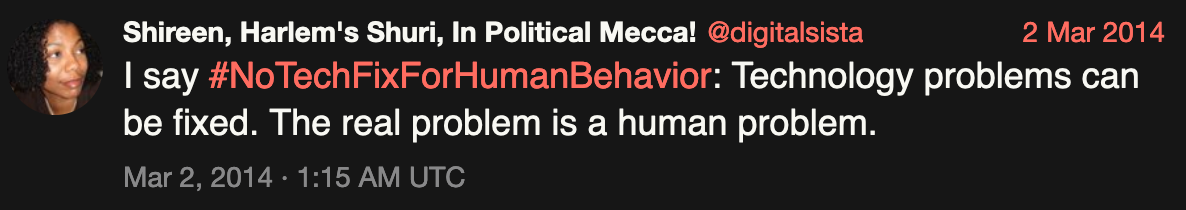

"[D]isinformation in the current age is highly sophisticated in terms of how effectively a kernel of truth can be twisted, exaggerated, and then used to amplify and spread lies."

– Shireen Mitchell of Stop Online Violence Against Women, in Disinformation: A Racist Tactic, from Slave Revolts to Elections

Another way to look at it ...

At the same time, the messy discussions around The Bad Space are also a case study of the resistance in today's fediverse to technology created by a Black person (working with a diverse team of collaborators that includes trans and queer people) that allows people to better protect themselves.3

Many people misleadingly describe reactions to The Bad Space in terms of tensions between trans people and Black people – and coincidentally enough, that's just how the pair of wildly inaccurate but very popular neocities posts I'll discuss in an upcoming installment on racialized disinformation and misinformation frame it, too.4 That's unfortunate in many ways. For one thing, Black trans people exist. Also, the "Black vs. trans" framing ignores the differences between trans femmes, trans mascs, and agender people. And the alternate framing of tensions between white trans femmes and Black people is no better, erasing trans people who are neither white nor Black,5 multiracial trans people, white agender people, white trans mascs (and many others) while ignoring the impact of cis white people, colorism, ableism, etc etc etc.

Besides, none of these communities are monolithic. There's a range of opinion on The Bad Space in Black communities, trans communities, and different intersectional perspectives (white trans femmes, disabled trans people) – a continuum between some people helping create it and/or actively supporting it, others strongly opposing it, with many somewhere in between.

More positively though, these dynamics – and the racist and anti-trans language in the discussions about The Bad Space – also make it a good case study of how cis white supremacy creates wedges (and the appearance of wedges) between and within marginalized groups – and erases people at the intersections, who fall through the cracks. And with luck it'll also turn out to be a larger case study about how anti-racist and pro-LGBTQIA2S+ people work together as part of an intersectional coalition to create something that's very different from today's fediverse.

The fediverse's technology problems do need to be fixed, and I'll return to that in an upcoming installment in this series. But if the fediverse – or a fork of today's fediverse – is going to move forward in an anti-racist and pro-LGBTQIA2S+ direction, understanding these dynamics is vital. And whether or not the fediverse moves forward and fixes its technology problems ... well, understanding these dynamics is vital for whatever comes next, because these same problems occur on every social network platform.

So there's a lot going on here, which means this is going to be a long post. Here's an outline:

- The Bad Space and FSEP

- A bug leads to messy discussions, some of which are productive

- Nobody's perfect in situations like this

- These discussions aren't occurring in a vacuum

- Also: Black trans, queer, and non-binary people exist

The Bad Space and FSEP

"The Bad Space is a collaboration of instances committed to actively moderating against racism, sexism, heterosexism, transphobia, ableism, casteism, or religion."

– The Bad Space's About page

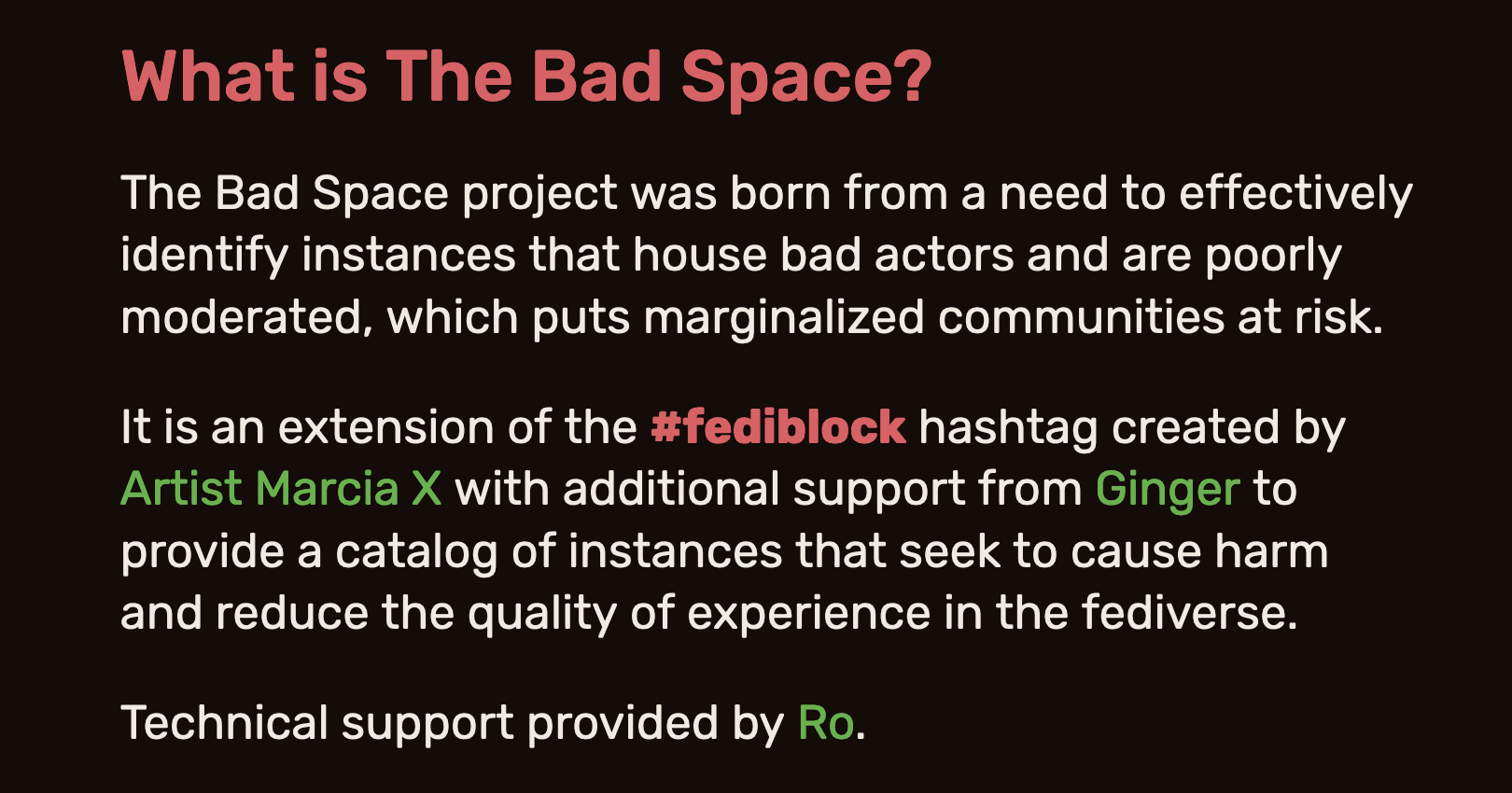

The work-in-progress version of The Bad Space's web site (currently at tweaking.thebad.space) provides a web interface that makes it easy to look up an instance to see whether concerns have been raised about its moderation, and an API (application programming interface) making the information available to software as well. The Bad Space's instance catalog currently has over 3300 entries – roughly 14% of the 24,000+ instances in today's fediverse. Entries are added using the blocklists of multiple sources as input. Here's how the about page describes it:

"These instances have permitted The Bad Space to read their respective blocklists to create a composite directory of sites tagged for the behavior above that can be searched and, through a public API, can be integrated into external services."

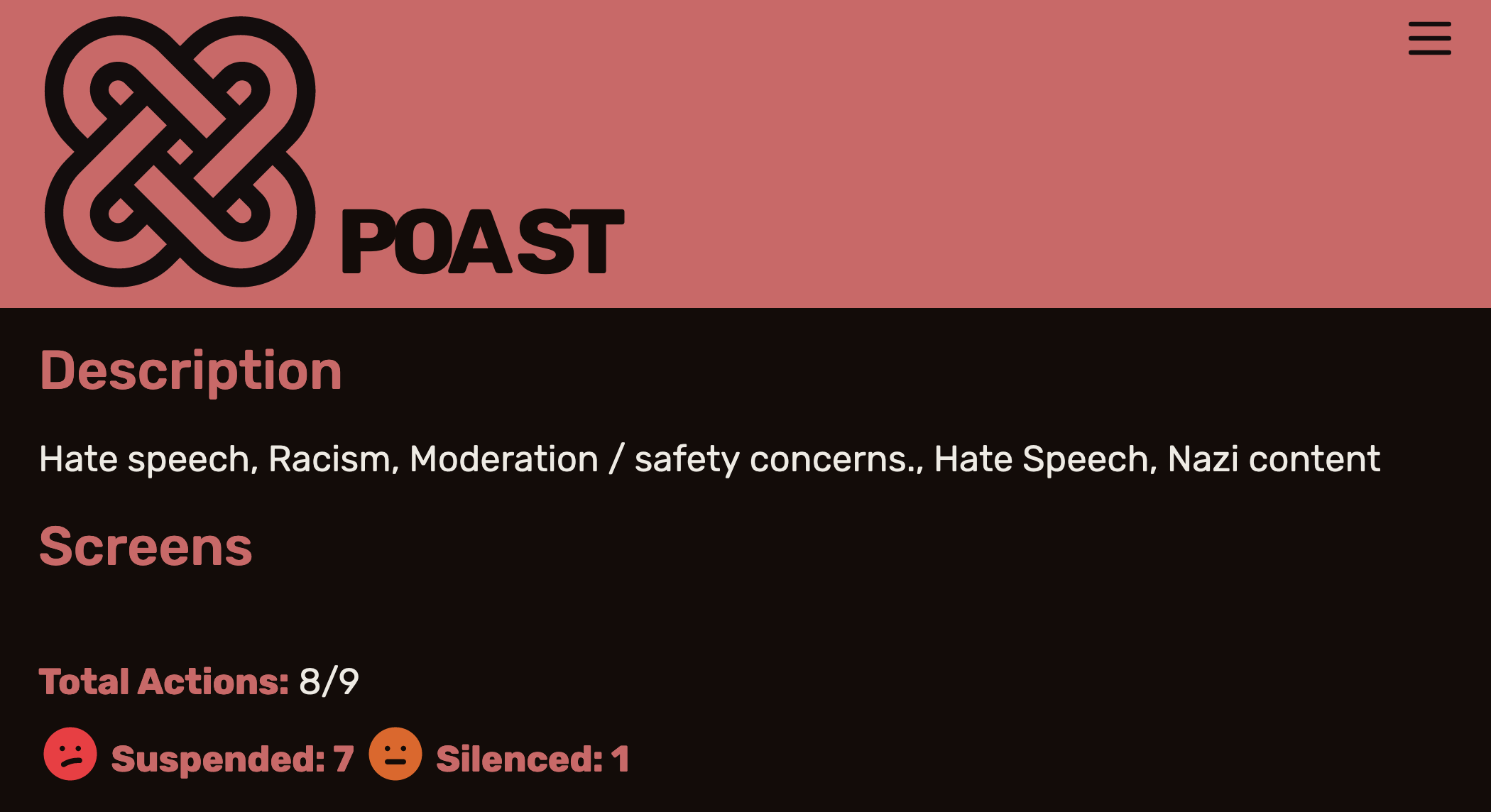

An instance appears on The Bad Space if it's on the blocklists of at least two of the sources. The web interface and API make it easy to see how many sources have silenced or defederated an instance. Other information potentially available for each instance includes a description and links to screens for receipts; screens are not currently available on the public site, and only a subset of instances currently have descriptions. Here's what the page for one well-known bad actor looks like.

The Bad Space also provides downloadable "heat rating" files, showing instances that have been acted on by some percentage of the sources. As of late November October, the 90% heat rating lists 131 instances, and has descriptions for the vast majority. The 50% heat rating lists 650+, and the 20% heat rating (2 or more) has over 1600.
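To make the listing rule and the heat ratings concrete, here's a minimal sketch in Python. It's an illustration under stated assumptions, not The Bad Space's actual code or API: the source names, instance names, and actions are hypothetical, and the heat rating is computed simply as the share of all sources that have either silenced or suspended an instance. The two-source minimum comes from the description above; the real implementation may count things differently.

```python
from dataclasses import dataclass

# Hypothetical source blocklists: source name -> {instance domain: action}.
# Actions use Mastodon's terms: "silence" (limit) or "suspend" (defederate).
SOURCES: dict[str, dict[str, str]] = {
    "source-a.example": {"bad.example": "suspend", "spammy.example": "silence"},
    "source-b.example": {"bad.example": "suspend"},
    "source-c.example": {"bad.example": "silence", "spammy.example": "suspend"},
    "source-d.example": {"oneoff.example": "silence"},
}

MIN_SOURCES = 2  # listed only if at least two sources have acted on it


@dataclass
class Entry:
    domain: str
    silenced_by: int
    suspended_by: int

    @property
    def total(self) -> int:
        return self.silenced_by + self.suspended_by


def build_catalog(sources: dict[str, dict[str, str]]) -> dict[str, Entry]:
    """Combine per-source blocklists into a composite catalog."""
    counts: dict[str, Entry] = {}
    for blocklist in sources.values():
        for domain, action in blocklist.items():
            entry = counts.setdefault(domain, Entry(domain, 0, 0))
            if action == "suspend":
                entry.suspended_by += 1
            else:
                entry.silenced_by += 1
    # Drop instances that only a single source has acted on.
    return {d: e for d, e in counts.items() if e.total >= MIN_SOURCES}


def heat_list(catalog: dict[str, Entry], n_sources: int, percent: float) -> list[str]:
    """Instances acted on by at least `percent`% of all sources."""
    return sorted(d for d, e in catalog.items() if 100 * e.total / n_sources >= percent)


catalog = build_catalog(SOURCES)
print(heat_list(catalog, len(SOURCES), 50))  # ['bad.example', 'spammy.example']
print(heat_list(catalog, len(SOURCES), 90))  # []
```

Keeping the silence and suspend counts separate mirrors the UI improvement described later in this post: a reader can see not just how many sources acted on an instance, but how severely.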

FSEP

The Federation Safety Enhancement Project (FSEP) requirements document (authored by Ro, and funded by Nivenly Foundation via a direct donation from Nivenly board member Mekka Okereke) provides a couple of examples of how The Bad Space could be leveraged to improve safety on the fediverse.8 One is a tool that gives individual users the ability to vet incoming connection requests to validate that they are not from problematic sites.

This matters from a safety perspective because if somebody follows you they can see your followers-only posts. People who value their privacy – and/or are the likely targets of harassment or hate speech and don't want to let just anybody follow them – can turn off the "Automatically accept new followers" option in their profile if they're using the web interface (although Mastodon's default app doesn't allow this, another great example of Mastodon not giving people tools to protect each other). FSEP goes further by providing users with information about the instance the follow request is coming from – useful information if the request comes from an instance that has a track record of bad behavior.
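Here's a minimal Python sketch of that idea, assuming a hypothetical dictionary as a stand-in for a catalog lookup (The Bad Space's real API endpoints and response format aren't shown here). It annotates each pending follow request with what's known about the requester's instance and leaves the decision to accept or reject with the user.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CatalogInfo:
    silenced_by: int   # number of sources that limit the instance
    suspended_by: int  # number of sources that defederate from it
    description: str = ""


@dataclass
class FollowRequest:
    account: str  # e.g. "someone@instance.example"

    @property
    def domain(self) -> str:
        # The instance is everything after the last "@" in the handle.
        return self.account.rsplit("@", 1)[-1].lower()


# Hypothetical stand-in for a lookup against a catalog like The Bad Space.
CATALOG: dict[str, CatalogInfo] = {
    "bad.example": CatalogInfo(silenced_by=1, suspended_by=2, description="Harassment"),
}


def annotate(request: FollowRequest, catalog: dict[str, CatalogInfo]) -> str:
    """Describe the requester's instance; the user still makes the call."""
    info: Optional[CatalogInfo] = catalog.get(request.domain)
    if info is None:
        return f"{request.account}: no concerns recorded for {request.domain}"
    note = f" ({info.description})" if info.description else ""
    return (
        f"{request.account}: {request.domain} is limited by {info.silenced_by} "
        f"and suspended by {info.suspended_by} sources{note}"
    )


print(annotate(FollowRequest("troll@bad.example"), CATALOG))
print(annotate(FollowRequest("friend@good.example"), CATALOG))
```

The wording "no concerns recorded" is deliberate: an instance missing from the catalog hasn't been vetted as safe, it just hasn't been flagged.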

The other tools discussed in FSEP help admins manage blocklists, and address weaknesses in current fediverse support for blocklists.9 As Blocklists in the fediverse discusses at length, blocklists can have significant downsides; but in the absence of other good tools for dealing with harassment and abuse, the fediverse currently relies on them. FSEP's "following UI" is a great example of the kind of tool that complements blocklists, but the need for blocklists isn't likely to go away anytime soon.

While FSEP's proposed design allows blocklists from arbitrary sources, the implementation plan proposed initially using information from The Bad Space's catalog to fill that role in the minimum viable product (MVP). But sometimes products never get beyond the MVP. So what would happen if FSEP gets implemented, and then adopted as a default by the entire fediverse, and never gets to the stage of adding another source for blocklists?

Realistically, of course, there's no chance this will happen. The Bad Space includes mastodon.social on its default blocklist – and mastodon.social is run by Mastodon gGmbH, who also maintains the Mastodon code base. Mastodon's not going to adopt a default blocklist that blocks mastodon.social, and Mastodon is currently over 80% of the fediverse. So The Bad Space isn't going to get adopted as a default by the entire fediverse.

But what if it did?????????

A bug leads to messy discussions, some of which are productive

The alpha version of The Bad Space had been available for a while (I remember looking up an instance on it early in the summer after an unpleasant interaction with a racist user), and the conversations about it were relatively positive. The FSEP requirements doc was published in mid-August, and got some feedback, but there wasn't a lot of broad discussion of it. That all changed after the mid-September bug, which led to dozens of sites temporarily getting listed on The Bad Space by mistake – including girlcock.club (an instance run by trans women for trans folk) and tech.lgbt.

Of course when it was first noticed nobody knew it was a bug. And other trans- and queer-friendly sites also appeared on The Bad Space. Especially given the ways Twitter blocklists had impacted the trans community, it's not surprising that this very quickly led to a lot of discussion.

Even though nobody was using The Bad Space as a blocklist at the time, the bug certainly highlights the potential risks of using automatically-generated blocklists without double-checking. If an instance had been using "every instance listed on The Bad Space" as a blocklist, then thousands of connections would have been severed without notice – and instance-level defederation on Mastodon currently doesn't allow connections to be re-established if the defederation happens by mistake, so it would have been hard to recover.

Sometimes, messiness can be productive

Ro quickly acknowledged the bug and started working on a fix. A helpful admin quickly connected with the source that had limited girlcock.club because of "unfortunate branding" and resolved the issue – and the source was removed from The Bad Space's "trusted source" list. A week later Ro deployed a new version of the code that fixed the bug, and soon after that implemented UI improvements that provide additional information about how many of the sources have taken action against a specific instance and which actions have been taken.

From a software engineering perspective, this kind of messiness can be productive. Many people found ways to raise questions about, criticisms of, and suggestions for improvements to FSEP and The Bad Space without saying racist or anti-trans things. dclements' detailed questions on github are an excellent example, and I saw lots of other good discussion in the fediverse as well.

Discussions about bugs can highlight patterns10 and point to opportunities for improvements. For example, bugs aren't the only reason that entries are likely to appear on blocklists by mistake; other blocklists have had similar problems. What's a good appeal process? How to reduce the impact of mistakes? In a github discussion, Rich Felker suggested that blocklist management tools should have safeguards to prevent automated block actions from severing relationships without notice. If something like that is implemented, it'll help people using any blocklist.
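As one illustration of the kind of safeguard that could help here (a hypothetical sketch, not the design from that github discussion and not an existing Mastodon feature), an import tool could count how many existing follow relationships each new block would sever, apply only the blocks that sever nothing, and hold the rest for a human to review. The function and parameter names are invented for this example.

```python
from collections import Counter


def plan_blocklist_import(
    imported: set[str],
    already_blocked: set[str],
    follow_domains: list[str],
) -> tuple[list[str], dict[str, int]]:
    """Split newly imported blocks into ones that are safe to apply
    automatically and ones that would sever existing relationships.

    follow_domains holds the remote domain of every follow relationship
    (in either direction) on the local instance; repeats are expected.
    """
    new_blocks = {d.lower() for d in imported} - {d.lower() for d in already_blocked}
    relationships = Counter(d.lower() for d in follow_domains)

    auto_apply: list[str] = []
    needs_review: dict[str, int] = {}
    for domain in sorted(new_blocks):
        severed = relationships[domain]  # Counter returns 0 for unknown keys
        if severed == 0:
            auto_apply.append(domain)
        else:
            needs_review[domain] = severed
    return auto_apply, needs_review


auto, review = plan_blocklist_import(
    imported={"bad.example", "friendly.example", "old.example"},
    already_blocked={"old.example"},
    follow_domains=["friendly.example", "friendly.example", "other.example"],
)
print(auto)    # ['bad.example']
print(review)  # {'friendly.example': 2}
```

With a check like this, a mistaken entry (like the ones the mid-September bug produced) would show up as a review item with a count of the connections at stake, instead of silently cutting them off.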

But as interesting as the software engineering aspects are to some of us, much more of the discussion focused on the question of whether the presence of multiple queer and trans instances on The Bad Space reflects bias – or transphobia. As Identifying bias in blocklists discusses, these kinds of questions are important to take into account on any blocklist – just as biases against Black, Indigenous, Muslim, Jewish, and disabled people need to be considered (as well as intersectional biases). It's a hard question to answer!

"When trying to analyze a blocklist for bias against trans people, for example, there's no census of the demographics of instances in the fediverse, so it's not clear how to determine whether trans-led instances are overrepresented. The outsized role of large instances like mastodon.social that are sources of a lot of racism (etc) is another example; if a blocklist doesn't block mastodon.social, does that mean it's inherently biased against Black people? When looking at whether a blocklist is biased against Jews or Muslims, whose definitions of anti-Semitism get used? What about situations where differing norms (for example whether spamming #FediBlock is grounds for defederation, or whether certain jokes are racist or just good clean fun) disproportionately affect BIPOC and/or trans people?"

In some alternate universe this too could have been a mostly productively messy discussion. It really is a hard problem – and not just for blocklists, for recommendation systems in general – and the fediverse is home to experts in algorithmic analysis and bias like Timnit Gebru and Alex Hanna of the Distributed AI Research Institute (DAIR), Damien P. Williams, and Emily Bender, as well as lots of people with a lot of first-hand experience.

But alas, in this universe today's fediverse is not particularly good at having discussions like this.

Sometimes messiness is just messy

It might still turn out that this part of this discussion becomes productive ... for now let's just say the jury's out. It sure is messy though. For example:

- racists (including folks who have been harassing Ro and other Black people for years) taking the opportunity to harass Ro and other Black people

- a post that tech.lgbt's Retrospective On thebad.space Situation describes as being made in a state of "unfettered panic" left out "various critical but obscure details" and "unwittingly provided additional credibility" for the racist harassment campaign.

- waves of anti-Black and anti-trans language – and racialized disinformation and misinformation (including the wildly misleading anonymous and pseudonymous posts discussed in footnote 4) swept through the fediverse.

- a distributed denial of service (DDOS) attack targeting Ro's site and The Bad Space

- instances (including some led by trans and queer people) that saw The Bad Space as a self-defense tool for Black people, and saw Ro and other Black people as the targets of racist harassment, defederating from instances whose moderators and admins allowed, participated in, or enabled the attacks – which people who saw The Bad Space as "pure concentrated transphobia" regarded as unjust and as an attempt to isolate trans and queer people.

It still isn't clear who was behind the DDOS and the anonymous and pseudonymous racialized disinfo. For the DDOS, my guess is that it was some of the people who harassed Play Vicious in the past and/or nazis, terfs, and white supremacists trying to drive wedges between Black people and trans people and block an effort to reduce racism in the fediverse. With the DDOS attack, for example, they were probably hoping that Black supporters of The Bad Space would blame trans critics, and the trans critics would blame Ro or his supporters for a "false flag" operation to garner sympathy for his cause. But who knows, maybe it really was over-zealous critics – or channers doing it for the lulz.

Nobody's perfect in situations like this

In fraught and emotional situations like this, it's very easy for people to say things that echo racist and/or transphobic stereotypes and dogwhistles, come from a place of privilege and entitlement, embed double standards, or reflect underlying racist, anti-trans, and transmisogynistic assumptions that so many of us (certainly including me!) have absorbed without realizing.

The anti-trans language I saw came from a variety of sources, from opportunistic bigots to well-intentioned people expressing opinions about The Bad Space in anti-trans ways – supporters or critics of The Bad Space making statements attacking or erasing trans critics or supporters, or trans people in general. Calling white trans people out on their racism isn't anti-trans; using dogwhistles, stereotypes, or other problematic language while doing so is, and so is intentionally misgendering them. Suggesting that trans supporters of The Bad Space are ignoring the potential harms to trans people isn't anti-trans; suggesting that trans people who support The Bad Space "aren't really trans" (or implying that no trans people support The Bad Space) is, and so is intentionally misgendering or calling somebody a "theyfab".

By contrast, the anti-Black language I saw was primarily from people opposing The Bad Space (or impersonating a supporter) – although opportunistic racist bigots got involved as well. Some was blatant, although not necessarily intentional: stereotypes, amplifying false accusations, dogwhistles and veiled slurs. Another common form was criticisms of The Bad Space that don't acknowledge (or just pay lip service to) Black people's legitimate need to protect themselves on the fediverse, reflecting a racist societal assumption that Black people's safety has less value than white people's comfort. Black people (including Black trans, queer, and non-binary people) have been the target of vicious harassment for years on Mastodon and the fediverse, so no matter the intent, accusations that Ro or The Bad Space are causing division and hate ignore the fediverse's long history of whiteness and racism – and blame a Black person or Black-led project.

And some people managed to be anti-Black and anti-trans simultaneously. One especially clear example: a false accusation about a Black trans person, inaccurately claiming they had gotten a white person fired for criticizing The Bad Space.11 A false accusation against a Black person is anti-Black; a false accusation against a trans person is anti-trans; a false accusation against a Black trans person is both.

Just as in past waves of intense anti-Blackness in the fediverse, one incident built on another. The false accusation occurred after weeks of anti-Black harassment by multiple people on the harasser's instance (with the admin refusing to take action). When the Black trans person who had been falsely accused made a bluntly worded post warning the harassers to knock it off or there would be consequences, the harassers described it as an unprovoked death threat – and demanded that other Black and trans people criticize the Black trans person who was trying to end the harassment. It's almost like they think Black trans people don't have the right to protect themselves! And Black people are stereotypically associated with danger and violence, so it's not surprising many who only saw the decontextualized claim of a "death threat" assumed it was the whole story and proceeded to amplify the anti-Black, anti-trans framing.

Nobody's perfect in situations like this, and while some people making unintentionally unfortunate anti-trans or anti-Black statements acknowledged the problems and apologized, you can't unring a bell. And many didn't acknowledge the problems or apologize, or apologized but continued making unfortunate statements, at which point you really have to question just how "unintentional" they were.

These discussions aren't occurring in a vacuum

"Until we admit that white queers were part of the initial Mastodon "HOA" squad that helped run the initial Black Twitter diaspora off the site - and are also complicit in the abuse targeted at the person in question here - we're not going to make real progress."

– Dana Fried, September 13

The fediverse has a long history of racism, and of marginalizing trans people; see Dr. Johnathan Flowers' The Whiteness of Mastodon or my Dogpiling, weaponized content warning discourse, and a fig leaf for mundane white supremacy, The patterns continue ..., and Ongoing contributions – often without credit for some of it. And some of the racism has come from white queer and trans people. Margaret KIBI's The Beginnings, for example, describes how soon after content warnings (CWs) were first introduced in 2017:

"this community standard was weaponized, as white, trans users—who, for the record, posted un‐CWed trans shit in their timeline all the time—started taking it to the mentions of people of colour whenever the subject of race came up."

And the fediverse is still dealing with the aftermath of the Play Vicious episode. Here's how weirder.earth's Goodbye Playvicious.social statement from early 2021 describes it:

"Playvicious was harrassed off the Fediverse.... The Fediverse is anti-Black. That doesn't mean every single person in it is intentionally anti-Black, but the structure of the Fediverse works in a way that harrassment can go on and on and it is often not visible to people who aren't at the receiving end of it. People whom "everyone likes" get away with a ton of stuff before finally *some* instances will isolate their circles. Always not all. And a lot of harrassment is going on behind the scenes, too."

As Artist Marcia X says in Ecosystems of Abuse,

“Misgendering, white women/femmes making comments to Black men that make them uncomfortable, the politics of white passing people as they engage with darker folks, and slurs as they are used intracommunally—these are not easy topics but at some point, they do become necessary to discuss.”

Also: Black trans, queer, and non-binary people exist

"[T]he Black vs. Trans argument really pisses me off because it erases Black Trans people like me, but is also based on the assumption of Trans whiteness"

– Terra Kestrel, September 12

Black trans, queer, and non-binary people are at the intersection of the fediverse's long history of racism and of marginalizing trans people – as well as marginalization within queer communities and in society as a whole. One way this marginalization manifests itself is by erasure. How many of the people criticizing The Bad Space as anti-trans, or framing the discussions as pitting Black people's need to protect themselves against trans people's concern about being targeted, even acknowledged the existence of Black trans people?

"I think if you included Black trans folk and anti-racist white trans folk in your list of "trans folk to listen to," you'd have a more complete picture, and you'd understand why there are so few Black people on the Fediverse."

– Mekka Okereke, September 13

Black trans, queer, and non-binary people have lived experience with anti-Blackness as well as transphobia. They also have lived experience with intersectional and intra-community oppressions: racism and transphobia in LGBTQIA2S+ communities, transphobia and colorism in Black communities. And they're the ones who are most affected by transphobia in society. For example, trans people in general face a significantly higher risk of violence – and over 50% of the trans people killed each year are Black. Trans people on average don't live as long as cis people – and Black trans and non-binary people are significantly more likely to die than white trans and non-binary people.

Of course, no community is monolithic, and there are a range of opinions on Ro and The Bad Space from Black trans, queer, and non-binary people. That said, most if not all the Black trans, queer, and non-binary people I've seen expressing opinions publicly have expressed support for Ro and his efforts with The Bad Space, while also acknowledging the need for improvements.

"To be completely honest, any discussions about transphobia and the recent meta?

That has to come from Black trans and nonbinary folks."

– TakeV, September 16

To be continued!

The discussion continues in

- Compare and contrast: Fediseer, FIRES, and The Bad Space

- Steps to a safer fediverse

- Racialized disinformation and misinformation: a fediverse case study

- A golden opportunity for the fediverse – and whatever comes next

Some sneak previews:

- Racialized misinformation is false or misleading communication or propaganda – typically about racial or social justice issues – that deceives or manipulates people and sustains white supremacy.

- Racialized disinformation is racialized misinformation that's intentionally false or misleading, or done for the purpose of achieving profit or political gain.

Key tactics used in racialized disinfo also show up in racialized misinfo.

...

Fediseer, like The Bad Space, is a catalog of instances that can be accessed via a web user interface or programmatically via an API. It started with a focus on spam, which is a huge problem on Lemmy. But spam is far from the only huge moderation problem on Lemmy, so Fediseer's general approval/disapproval mechanism (and the availability of scripts that let it automatically update lists of blocked instances) means that many instances use it to help block sites with CSAM (child sexual abuse material) and as a basis for blocklists.

...

But these tools are only the start of what's needed to change the dynamic more completely. To start with, fediverse developers, "influencers," admins, and funders need to start prioritizing investments in safety. The IFTAS Fediverse Moderator Needs Assessment Results highlight one good place to start: provide resources for anti-racist and intersectional moderation. Those resources could include shared and distilled "positive deviance" examples of instances that do a good job, documentation of best practices, training, mentoring, templates for policies and processes, workshops, and cross-instance teams of expert moderators who can provide help in tricky situations. Resources developed and delivered with funded involvement of multiply-marginalized people who are the targets of so much of this harassment today are likely to be the most effective.

To see new installments as they're published, follow @thenexusofprivacy@infosec.exchange or subscribe to the Nexus of Privacy newsletter.

Notes

1 I'm using LGBTQIA2S+ as a shorthand for lesbian, gay, gender non-conforming, genderqueer, bi, trans, queer, intersex, asexual, agender, two-spirit, and others who are not straight, cis, and heteronormative. Julia Serano's trans, gender, sexuality, and activism glossary has definitions for most of these terms, and discusses the tensions between ever-growing and always incomplete acronyms and more abstract terms like "gender and sexual minorities". OACAS Library Guides' Two-spirit identities page goes into more detail on this often-overlooked intersectional aspect of non-cis identity.

2 Today, Mastodon has by far the largest installed base in the fediverse but (as I'll discuss in the upcoming Mastodon: the partial history continues) is likely to lose its dominant position. Other platforms like Streams, Akkoma, Bonfire, and GoToSocial have devoted more thought to privacy and other aspects of safety, and big players are getting involved – like WordPress, which recently added official support for ActivityPub.

That said, The Bad Space and FSEP also fit in well with the architectural vision new Mastodon CTO Renaud Chaput sketches in Evolving Mastodon’s Trust & Safety Features, so will be useful for instances running Mastodon as well. Mastodon – or a fork – has an opportunity to complement these tools by giving individuals more ability to protect themselves, shifting to a "safety by default" philosophy, adding quote boosts, and integrating ideas from other decentralized networks like BlackSky.

3 From this perspective, the reaction to The Bad Space is an interesting complement to the multi-year history of Mastodon's refusal to support local-only posts, a safety tool created by trans and queer people that allows trans and queer people (and everybody else) to better protect themselves. Hey wait a second, I'm noticing a pattern here! Does Mastodon really prioritize stopping harassment? has more -- although note that the Glitch and Hometown forks of Mastodon, and most other fediverse platforms, do support local-only posts.

4 OK it's not really coincidental. As Disinfo Defense League points out, using wedge issues to divide groups is a common tactic of racialized disinformation. The neocities posts also used other common disinfo tactics. For example, an anonymous post that purported to analyze the accuracy of The Bad Space had a kernel of truth in that it correctly identified a couple of errors, misleading omissions in failing to note that the errors had already been fixed by the time the post was published, misleading omissions (and sometimes flat-out lies) about the reason that instances appeared there, a misleading claim that the data showed evidence of bias in The Bad Space (as opposed to the reality that there were so many mistakes in the data that no conclusion could be drawn), and a misleading claim that this was an intentional attempt to target trans people, anarchists, and ActivityPub implementers. And then the next neocities post, a pseudonymous hit piece, simply cited the "evidence" of the previous post as if it were proof and made up wild claims about Nivenly Foundation -- which is not, in reality, an "AI Art Foundation" 🤣 On the one hand it's ridiculous, but it fits in with many people's unexamined racist biases, so they often believe it without even bothering to check.

5 Thanks to Octavia con Amore for pointing out to me the erasure of trans people who are neither white nor Black from so many of these discussions.

6 There is currently no footnote #6 or #7. Ghost, the blogging/newsletter software I'm using, doesn't have a good solution for auto-numbering footnotes unless you write your whole post in Markdown, and it's a huge pain to manually edit them, so my footnotes often wind up very strange-looking.

8 Nivenly's response in the GitHub Discussion of the FSEP proposal, from mid-September, has a lot of clarifications and context on FSEP, and Nivenly's October/November update has the current status of FSEP:

Unfortunately due to a few factors, including the unexpected passing of our founder Kris Nóva days after the release of the product requirements document as well as the author and original maintainer Ro needing to take a step back due to a torrent of racism that he received over The Bad Space, the originally planned Q&A that was supposed to happen shortly after FSEP was published did not have the opportunity to happen.... The status of this project is on hold, pending the return of either the original maintainer or a handoff to a new one.

9 From the FSEP document:

- Allowing blocklists to be automatically imported during onboarding dramatically reduces the opportunity to be exposed to harmful content unnecessarily.

- The ability to request an updated blocklist or automate the process to check on its own periodically.

- Expand blocklist management by listing why a site is blocked, access to available examples, and when the site was last updated.

These all address points Shepherd characterizes in The Hell of Shared Blocklists as "vitally important to mitigate harms".
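To make the FSEP requirements above a bit more concrete, here is a minimal sketch of what a blocklist entry carrying that context might look like, plus a staleness check for periodic updates. The class name, field names, and the one-day default are hypothetical illustrations, not FSEP's actual schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import List


@dataclass
class BlocklistEntry:
    """Hypothetical blocklist entry: not FSEP's real format."""
    domain: str
    reason: str                     # why the site is blocked
    last_updated: datetime          # when the entry was last reviewed
    examples: List[str] = field(default_factory=list)  # links to receipts, if available


def needs_refresh(fetched_at: datetime, now: datetime,
                  max_age: timedelta = timedelta(days=1)) -> bool:
    """Return True if a locally cached blocklist is old enough to re-fetch.

    The one-day default is an arbitrary assumption for illustration.
    """
    return now - fetched_at >= max_age


if __name__ == "__main__":
    entry = BlocklistEntry(
        domain="harassers.example",  # hypothetical domain
        reason="coordinated harassment",
        last_updated=datetime(2023, 9, 1, tzinfo=timezone.utc),
    )
    now = datetime(2023, 9, 3, tzinfo=timezone.utc)
    print(entry.domain, entry.reason, needs_refresh(entry.last_updated, now))
```

Keeping the reason, examples, and last-updated date on each entry is what lets an admin see why something is blocked instead of importing an opaque list of domains.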

Of course, if Mastodon had better support for allow-list federation, then starting off with a minimal allow-list would mean that an initial blocklist doesn't need to be imported. But it doesn't, and based on a recent discussion with Mastodon CTO Renaud Chaput that's unlikely to change.

10 For example, The Bad Space's mid-September bug resulted in instances getting listed on The Bad Space if even one of its sources had taken action against them; the correct behavior is that an instance should only be listed if two or more sources have taken action against it. The similarity between the bug's effect on girlcock.club and tech.lgbt and the issue with Oliphant's now-discontinued unified max list that Seirdy discusses in Mistakes made reinforces that blocklist "consensus" algorithms that only require a single source are likely to affect sex-positive queer spaces, and so should be avoided.
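A minimal sketch of the kind of threshold rule described above, assuming each source publishes the set of instance domains it has acted against. The function name, source names, and domains are hypothetical; this is not The Bad Space's actual code.

```python
from collections import Counter
from typing import Mapping, Set


def consensus_blocklist(source_actions: Mapping[str, Set[str]],
                        threshold: int = 2) -> Set[str]:
    """Return instances that at least `threshold` distinct sources acted against.

    `source_actions` maps a (hypothetical) source name to the set of
    instance domains that source has limited or suspended.
    """
    if threshold < 1:
        raise ValueError("threshold must be at least 1")
    # Count distinct sources per domain; set() keeps one source from counting twice.
    counts = Counter(
        domain for domains in source_actions.values() for domain in set(domains)
    )
    return {domain for domain, n in counts.items() if n >= threshold}


if __name__ == "__main__":
    # Hypothetical data for illustration only.
    actions = {
        "source_a": {"harassers.example", "queer-space.example"},
        "source_b": {"harassers.example"},
        "source_c": {"harassers.example", "spam.example"},
    }
    # threshold=1 mimics the bug: one source's action is enough to list an instance.
    print(sorted(consensus_blocklist(actions, threshold=1)))
    # threshold=2 is the intended behavior.
    print(sorted(consensus_blocklist(actions, threshold=2)))
```

With `threshold=1`, "queer-space.example" gets listed on the strength of a single source's decision; with `threshold=2`, only "harassers.example", which all three sources agree on, is listed.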

11 Why am I so convinced that it was a false accusation? For one thing, the accuser admitted it: "I fucked up." Also, facts are facts. Nobody had been fired. Two volunteers had left the Tusky team, and both of them said that it was unrelated to the Black trans person's comment.

The false accuser says it wasn't intentional. They just happened to stumble upon a post by a Black trans person who had blocked them, flipped out (because tech.lgbt was being held accountable for the post I mentioned above where their moderator had ignored crucial details and added credibility to racist harassment of a Black person), and thought the situation was so urgent that they needed to make a post that (in their own description) "rushed to conclusions" and "speculated on what I couldn't see under the hood" and was wrong.